The risks and rewards of deepfake tech

I was recently asked to comment on a LinkedIn post about deepfakes. After submitting my knee-jerk reaction, I started to think about it more. Although deepfakes show promise for many applications, they also pose risks that need to be addressed. What exactly are deepfakes? They are highly realistic digital manipulations of audio, video, or still images created by training AI models. The results can be startlingly convincing: the synthetic media can depict words and actions that never actually occurred. I have included a few examples in this article. Most of them are old, and even with the older tech the results are…scary.

At first, deepfakes will mostly feature famous people, because it is easier to get the data to train a model on someone who is all over YouTube. By ingesting large quantities of video and audio, the model can produce a very realistic video in which the subject looks, talks, and moves like the real person. Over time, I believe the amount of data needed for a compelling deepfake will shrink, and this is concerning.

Right now, most people who know about deepfakes are not very worried about the implications, because they are thinking only in terms of their own personal lives. It is similar to those who argued the Patriot Act was not a big deal because "I have nothing to hide." Those who are not worried about deepfakes affecting them think that way because "I am not online enough for someone to create a deepfake of me."

Here is an example of a deepfake that is three years old.

An example of a Morgan Freeman deepfake that is two years old

Here is an article from the Department of Homeland Security regarding the increasing threat of deepfakes.

In this article, we will cover some of the risks and rewards of deepfake technology, and as always, we welcome your feedback.

Potential Benefits of Deepfakes

- More immersive augmented and virtual reality experiences using realistic digital avatars and synthetic voices. This could bring secondary characters to life in new ways.

- AI and machine learning models, especially for computer vision and natural language processing, could be trained more robustly on large datasets of synthetic media.

- Visual effects for movies and entertainment could use deepfake technology to cut costs, for example by using a synthetic replica of an actor for dangerous stunts or expensive scenes.

- Deceased actors or public figures could be digitally revived and their likenesses used for new performances and content. This could allow beloved figures to appear in future films or educational content.

Concerning Risks of Deepfakes

- Individuals or political groups could leverage deepfakes to spread misinformation by manipulating audio or video of public figures to damage reputations or undermine institutions. Even crude edits could erode public trust.

- Synthetic media could artificially manipulate stock prices and company valuations if recordings emerge of falsified announcements, earnings calls, or resignations. Anyone who knows a fake is coming could trade ahead of it and profit from the price swing.

- Harassment, bullying and abuse could escalate as disgruntled individuals create embarrassing or offensive deepfakes of acquaintances and share them online. Victims may have little recourse.

- Faked intimate media and "revenge porn" could be used to exploit or blackmail individuals with realistic synthesized video or audio depicting them in compromising situations.

- Deepfakes pose a fundamental challenge to the authenticity of any media: once anything could be fake, people can dismiss genuine recordings as fabricated. This "liar's dividend" could cripple efforts to document facts and combat misinformation in an increasingly polarized information landscape.

Common Ideas Regarding Risk Mitigation

- Developing advanced detection systems and digital forensics to identify manipulated media. Researchers are already making progress on techniques to authenticate media and flag deepfakes.

- Educating the public to be more skeptical of video/audio recordings that cannot be verified, especially on social media. Media literacy initiatives are important.

- Enacting laws and policies to make creating and distributing malicious deepfakes illegal, while allowing positive applications like education and entertainment. A comprehensive governance framework is needed.

- Giving individuals meaningful control over and consent for any synthetic media depicting them. This respects personal autonomy and privacy.

Why I think it’s BS

When I was younger, I frequented 2600 meetups. 2600 is a quarterly publication known as "The Hacker Quarterly," and it has a wonderful community, some of it ethical and some of it not so much. One of our experiments was to go into Harvard Square and give people a five-dollar Dunkin' Donuts gift card if they would take a verbal survey with us. The survey asked a bunch of questions, but a few of them were security related, like "name of your first pet," "name of your best friend," and "what year you graduated high school." People were willing to give up a lot of information for a few dollars' worth of coffee or donuts.

I bring that example up because not long ago, people were asked to post a picture of themselves from when they were young alongside a current one. There was no monetary incentive; it was just a "challenge" that you participated in. I can guarantee that in the future there will be a set of phrases and words that make it very easy for someone to replicate how you speak. There will be another "challenge" where people are asked to read some crazy excerpt and try to keep a straight face, or some variation of that. That recording is exactly the data bad actors will need to imitate your voice.

Many of our problems stem from a lack of self-reflection and consideration when we are faced with a decision. Our society is quick to chase rewards without thinking about the risks they impose. This applies to everything from answering a few seemingly harmless questions for a gift card to deciding to work on an AI project with serious ramifications. When I look at the benefits of deepfakes, nothing there seems "good enough" to warrant the risks that come with them. The mitigation tactics described above come from some smart individuals and groups, but they are also the same tools that bad actors will test against in order to bypass the safeguards.

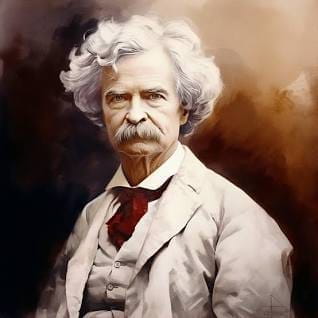

"A lie can travel halfway around the world while the truth is putting on its shoes."

Often attributed to Mark Twain

Imagine a deepfake of Warren Buffett saying that he has divested his Coca-Cola stock, or Elon Musk saying that Teslas are death traps. Once those lies go out into the world, the damage is done: markets will correct, but many people will have made some really bad decisions based on a lie. In my opinion, the biggest implication of deepfakes is the manipulation of things like markets, politics, and social sentiment. The implications and risks of deepfake technology outweigh any potential gains. That is my opinion, and I would love to hear yours. Please drop me a note and let me know what you think.